by Danika Ciullo, Marketing Advisor

AI and ChatGPT (GPT-3.5)

It's Thursday night and 14-year-old Ethan has an essay due the next morning examining the plot and characters of a Charles Dickens book.

Total word count so far? 0.

But don't worry, poor Ethan is only a few clicks away from having an outline, intro, and closing paragraph.

Introducing – ChatGPT by OpenAI.

ChatGPT is a powerful chatbot built on OpenAI's GPT-3.5 series of Generative Pre-trained Transformer (GPT) models. These are autoregressive language models that use deep learning to generate human-like text, including essays.

However, the use of this technology and other AI comes with potential dangers that must be considered.

I'm not going to spend a whole article talking about this new technology because it's widely accessible and every single news source seems to have written at least two articles on it in the last week alone.

Ethical Implications of Artificial Intelligence

Companies will see an immediate use for a tool like ChatGPT in automated customer service responses, but the possibilities of AI in general go much deeper. You need to be prepared because growth can be exponential and move faster than expected.

Although AI has the potential to greatly benefit society in many ways, it also raises a number of ethical concerns.

Let’s take a look at some of the main issues around artificial intelligence.

AI causing bias and discrimination

AI systems can potentially perpetuate and even amplify societal biases, leading to unfair and discriminatory practices.

Additionally, if the data used to train an AI system is not diverse enough, it may not be able to accurately recognize or make decisions about individuals from underrepresented groups.

This could lead to unfair outcomes, such as denying loan applications or employment opportunities to qualified individuals based on their race, gender, or other protected characteristics.

A little rumour I have heard is worrying, too.

There's a claim that facial recognition technology has higher error rates for people with darker skin tones.

I dug into this a bit more, and the picture seems less settled than the claim suggests. Many of the studies behind it are at least five years old now, and the technology has advanced a lot since then.

Let's make sure the studies on AI are not biased before claiming the technology is biased. Bit of a paradox, hey?

Mitigating the issues mentioned will take inclusive and balanced data sets during training and testing, though.
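For the technically curious, here is a minimal sketch of what checking for balance during testing might look like. It assumes you have a set of test results tagged with a demographic group; the group names, the loan-style labels, and the `error_rates_by_group` helper are all made up for illustration and are not taken from any real system.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return {group: (sample_count, error_rate)} for labelled predictions.

    Each record is a (group, actual, predicted) tuple.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, actual, predicted in records:
        totals[group] += 1
        if actual != predicted:
            errors[group] += 1
    return {g: (totals[g], errors[g] / totals[g]) for g in totals}


# Hypothetical test results: (group, actual outcome, model's prediction)
test_results = [
    ("group_a", "approve", "approve"),
    ("group_a", "approve", "approve"),
    ("group_a", "deny", "deny"),
    ("group_a", "approve", "approve"),
    ("group_b", "approve", "deny"),
    ("group_b", "deny", "deny"),
]

report = error_rates_by_group(test_results)
for group, (count, rate) in sorted(report.items()):
    print(f"{group}: {count} samples, error rate {rate:.0%}")
```

If one group has far fewer samples or a noticeably higher error rate than another, that is a hint the data set may need rebalancing before the system is trusted with real decisions.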

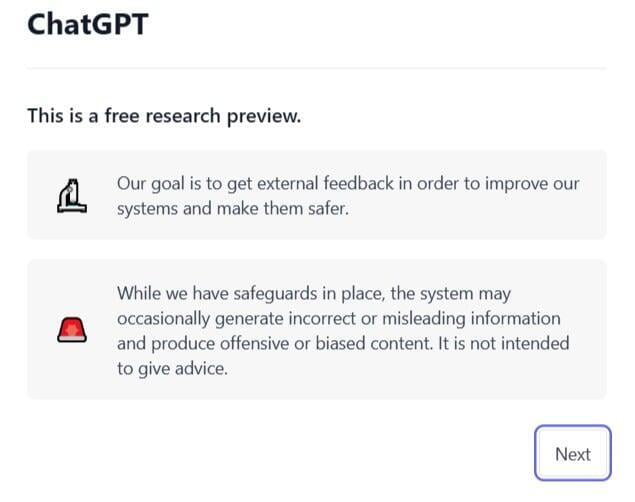

I'll leave you with this pop-up from ChatGPT acknowledging that artificial intelligence needs to learn not to be biased:

Privacy and security

AI systems can collect and analyze large amounts of personal data, which raises concerns about data privacy and security.

The use of AI in areas such as healthcare, finance, and surveillance can lead to the collection of sensitive personal information, such as medical records, financial transactions, and location data.

This information, if mishandled, can lead to identity theft, financial fraud, and other forms of personal harm.

AI systems can also be vulnerable to hacking and other forms of cyber attack, which can compromise the security of personal data.

We don’t want malicious actors manipulating AI systems!

Job displacement caused by AI

As AI systems become more advanced, they may automate tasks that were previously done by humans, leading to job displacement. Automation may seem like all sunshine for most of us, but it can hurt the many people who don't have a backup or an easy replacement income stream.

We could see increased income inequality, and large-scale disruption would have a major effect on the entire economy, potentially causing social and political instability.

Who would be responsible in these cases? Does the employer have a duty to upskill employees or help with their next job?

I argue there is an ethical responsibility to avoid mass-scale layoffs with no preparation.

Proper regulations covering support, benefits, job placement assistance, and unemployment would be needed.

Autonomous weapons paired with AI

There are fears AI systems could make decisions on their own to use deadly force, without human oversight or control – especially if paired with physical machinery.

Unintended consequences could mean targeting civilians or violating international laws and norms.

Therefore, there is a need for international regulations and controls to ensure that the development and deployment of autonomous weapons are done responsibly and ethically.

From my perspective, this should be high on the priority list of AI issues.

Responsibility of AI behaviour

As AI systems become more autonomous, it can be difficult to determine who is responsible for their actions.

Tesla's self-driving cars caused a stir last year after being involved in several accidents, and in that case it seems pretty clear-cut that the company would take the fall.

But what about a more "thinking" machine that could provide guidance or tell someone what to do?

It seems easy to say now that the company that makes the AI is 100% responsible for all actions or outputs an AI gives in the future, but I have another argument to play devil's advocate.

When we think about cars, is it the car owner/user that is liable for misuse or the maker of the car?

And that is just considering AI tools that humans interact with directly. I'd argue the operators are just as responsible as the creators of the AI.

We can't have AI out causing harm to individuals or society.

AI and Consciousness

Currently, no clear definition exists of what constitutes consciousness, making it difficult to say whether an AI system could one day be truly conscious.

I think we are a way off from that happening, but you never know!

The question would be what we do to protect an AI that becomes conscious. What are the obligations and what is its moral status?

My first thought would be not to exploit this independently thinking being; it deserves its own set of rights.

Would responsibility then shift from the creators to the AI itself? Arguments are already being made that it may not be ethical to even create an AI that could become conscious.

Again, guidelines need to be created and agreed upon.

Plagiarism using AI

ChatGPT can easily generate text that is similar to existing content, making it easy for users to pass off the generated text as their own work – plagiarism! This can be especially problematic in academic settings, where plagiarism can result in serious consequences for students.

Luckily it seems schools are gearing up for this and finding ways to detect if AI was used to write something (such as this plagiarism detection tool).

I also know my teachers would be able to tell whether I wrote something or my brother did, for example! They're great at picking up an author's voice.

It's important to keep in mind that plagiarism checkers and text comparisons are not foolproof and may not identify all instances of AI-generated text. Schools need multiple methods for teachers to employ.

AI-created Art

There's also been a major increase in AI-generated art. Questions are being raised about issues of authorship and originality, as well as the role of AI in the creative process.

On one hand, AI-generated art can be seen as a form of collaboration between humans and machines, potentially leading to new and exciting forms of artistic expression (similar to when film and video developed).

On the other hand, there are concerns that AI-generated art can blur the line between human and machine work and could potentially devalue the work of human artists.

Additionally, there are concerns about whether AI-generated art should be considered original. Should creators of the AI be noted as authors?

Dealing with Ethical Guidelines in AI

To mitigate these ethical concerns, it is important for artificial intelligence researchers, developers, and policymakers to work together to ensure that AI systems are developed and used responsibly.

This includes designing AI systems with fairness, transparency, and accountability in mind, as well as developing guidelines and regulations for the use of AI.

The key points above are good indicators of what you might include as they relate to your business specifically, but they are not an exhaustive list.

Luckily, there are frameworks and conversations already started.

The first place for New Zealand businesses to tune into would be the major non-governmental organisation AI Forum and their publications, articles, and resources – particularly the roadmap they created for AI in New Zealand and the Trustworthy AI in Aotearoa: AI Principles PDF.

The AI Forum makes the point, though, that AI “does not exist in a legal vacuum” and that these principles sit alongside existing laws and regulations, including, in NZ, Te Tiriti o Waitangi.

The NZ government isn’t necessarily proactive about adding specific AI regulations, and instead updates legislation to include AI when it gets reviewed or created. This is, in part, because AI is not particularly easy to define or govern.

I did find that the Data Ethics Advisory Group currently has four members and contributes to reviewing policy, proposals and ideas related to new and emerging uses of data in our government. Their expertise stretches across:

- privacy and human rights law

- ethics

- innovative data use and data analytics

- Te Ao Māori

- technology

- public policy and government interests in the use of data.

The only problem? They haven’t had a published agenda since December 2020, and apparently haven’t met since then.

… But don’t forget about the opportunities!

The reason so many are calling for more regulation and ethical consideration around AI is simply because it’s incredibly powerful.

So yes, it needs to be used correctly. But there are also huge opportunities for both individuals and businesses in AI adoption.

If you’re unsure how AI fits in with your current model or want to review the way your business operates with tech, give us a shout. Strategic business reviews are our bread-and-butter.

Oh, and about 85% of the above article was written by ChatGPT except the last few closing paragraphs. Pretty neat stuff!

Danika Ciullo is a Marketing Advisor for CIO Studio and is thrilled at the advances in AI and the efficiencies it offers. If you want to chat about AI or digital for your business operations, reach out to one of our core advisors.
